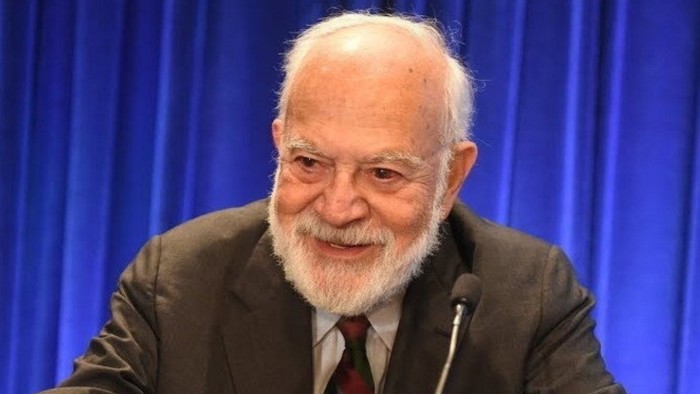

According to the philosopher Harry Frankfurt, lies are not the greatest enemy of truth. Bullshit is worse.

As he explained in his classic essay On Bullshit (1986), the liar and the truth-teller are playing the same game, but on opposite sides. Each responds to the facts as they understand them, and either accepts or rejects the authority of truth. The bullshitter, however, ignores these demands altogether. “He does not reject the authority of the truth, as the liar does, and oppose himself to it. He pays no attention to it at all. By virtue of this, bullshit is a greater enemy of the truth than lies are.” Such a person wants to persuade others, irrespective of the facts.

Sadly, Frankfurt died in 2023, just a few months after ChatGPT was released. But reading his essay in the age of generative artificial intelligence provokes a queasy familiarity. In several respects, Frankfurt’s essay neatly describes the output of AI-enabled large language models. They are not concerned with truth because they have no conception of it. They operate by statistical correlation, not empirical observation.

“Their greatest strength, but also their greatest danger, is their ability to sound authoritative on nearly any topic irrespective of factual accuracy. In other words, their superpower is their superhuman ability to bullshit,” write two University of Washington professors who run an online course scrutinising these models, called “Modern-Day Oracles or Bullshit Machines?”. Others have dubbed the machines’ output “botshit”.

One of the best-known, and most unsettling, though sometimes interestingly creative, features of LLMs is their “hallucination” of facts. Some researchers argue that this is an inherent feature of stochastic models, not a bug that can be fixed. Even so, AI companies are trying to solve the problem by improving the quality of their data, fine-tuning their models and building in verification and fact-checking systems.

They would appear to have some way to go, though, considering that a lawyer for Anthropic told a Californian court this month that their law firm had unintentionally submitted an incorrect citation hallucinated by the company’s own AI, Claude. As Google’s chatbot warns its users: “Gemini can make mistakes, including about people, so double-check it.” That did not stop Google from rolling out an “AI mode” across its main services in the US this week.

The very methods these companies use to improve their models, such as reinforcement learning from human feedback, themselves risk introducing bias, distortion and undeclared value judgments. As the FT has shown, AI chatbots from OpenAI, Anthropic, Google, Meta, xAI and DeepSeek describe the qualities of their own companies’ chief executives, and those of rival executives, very differently. Elon Musk’s Grok has also promoted memes about “white genocide” in South Africa in response to wholly unrelated prompts. xAI said it had fixed the glitch, which it blamed on an “unauthorized modification”.

Such models create a new and worse category of potential harm, “careless speech”, according to Sandra Wachter, Brent Mittelstadt and Chris Russell in a paper from the Oxford Internet Institute. In their view, careless speech can cause intangible, long-term and cumulative harm. It is like “invisible bullshit” that makes society dumber, Wachter tells me.

With a politician or a salesperson, we can at least usually understand their motivations. But chatbots have no intentionality and are optimised for plausibility and engagement, not truthfulness. They invent facts for no purpose. They can pollute humanity’s knowledge base in unfathomable ways.

The intriguing question is whether AI models could be designed for greater truthfulness. Would there be market demand for them? Or should model developers be forced to abide by higher standards of truth, such as those that apply to advertisers, lawyers and doctors? Wachter suggests that developing more truthful models would take time, money and resources that the current iterations are designed to save. “It’s like wanting a car to be a plane. You can push a car off a cliff, but it’s not going to defy gravity,” she says.

All that said, generative AI models can still be useful and valuable. Many lucrative business, and political, careers have been built on bullshit. Used appropriately, generative AI can be deployed for countless business use cases. But it is delusional, and dangerous, to mistake these models for truth machines.

john.thornhill@ft.com